Technology Pushes Data Center Cooling to Edge (and Beyond)

By DAVID KLUSAS

Vertiv

Columbus OH

The demand for data has never been greater. One could quite likely repeat that phrase every day for the foreseeable future and never be wrong.

Data management is growing at both ends of the spectrum — smaller, stand-alone data centers at the edge of the network, and hyperscale facilities that are more than 10 MW in size. Both rely on thermal management to keep critical electronic systems up and running, but their needs are as different as their size.

Keeping cool on the edge

Broader industry and consumer trends are driving edge growth, including the Internet of Things (IoT) and 5G networks designed to deliver powerful, low-latency computing closer to the end-user. Micro-data centers — sometimes converted rooms, closets or storage areas — are expected to be a $6.3 billion industry by 2020, according to a report by MarketsAndMarkets.

For most businesses, the edge is considered mission critical as it drives revenue and is where most data is stored. In recent Vertiv edge research, IT, facilities and data center managers cited insufficient cooling capacity as their top concern in edge spaces, followed by service and maintenance, improved monitoring, hot spots and insufficient power capacity.

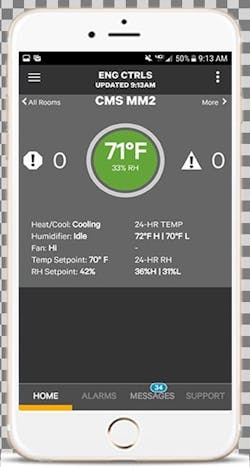

Building air conditioning typically cannot provide the level of cooling that today’s advanced electronics require to ensure network availability, and in most cases relying on it is not a risk worth taking. Depending on site needs, dedicated edge cooling systems will address airflow, temperature and humidity 365 days a year. In some cases, remote system monitoring and controls, along with simplified integration into building management systems (BMS), are available. In a best-case scenario, the remote monitoring system will use an IoT smart device app or online portal to allow the system to be viewed and managed from virtually anywhere.

The focus on easy-to-install solutions is increasing, and a variety of cooling options based on site limitations and budgets have evolved to meet the needs of this market. Because space is typically at a premium, some systems may be mounted above dropped ceilings or on walls.

The use of mini-split units has increased in this space, in part because variable-capacity compressors and fans have made them more efficient. They join traditional wall-mounted, ceiling-mounted, perimeter and row-based units. New rack cooling systems, literally designed to fit into server racks, recently have been introduced for edge applications, efficiently delivering 3.5 kW of cooling with various heat rejection options: room or ceiling plenum discharge, or a split system using a refrigerant loop and outdoor condenser.

Larger centers, more efficient, economical

Five years ago, a three-megawatt data center was considered large. No longer. Mega-projects more than 10 megawatts in size are becoming the norm, with colocation and hyperscale customers focusing on using standardized or high-volume custom solutions with common design platforms across their projects. This lowers initial costs, enhances speed to market and mitigates risk.

Another trend is toward multi-story data centers, reducing the amount of potentially expensive real estate needed in areas where these data centers are concentrated, such as Northern Virginia, Northern California’s Bay Area, and North Texas. This makes some cooling technologies, such as pumped refrigerant and chilled water, more applicable than others.

Finally, the use of non-raised floor environments combined with hot-aisle containment is growing rapidly as customers attempt to drive cost out of their initial builds. This approach makes the space less expensive to configure initially, but it greatly changes the cooling profile and introduces complexities in getting the cooling to where it is needed in the room. In a slab floor environment, the operator no longer has the ability to just change floor tiles to increase airflow or cooling capacity in certain areas of the data center. Advanced thermal controls with integration between rack sensors and cooling units become critical to making the entire system work effectively and efficiently.

Visitors to data centers in the recent past may recall being a bit chilly and donning a sweater or jacket. In most data centers now being designed and constructed, it’s not quite as cold inside anymore: the ASHRAE 2016 thermal guidelines give a recommended range of 18°C (64.4°F) to 27°C (80.6°F). Increasing supply temperatures allows additional technologies to be applied to data center cooling, but reliability remains the top priority of any thermal management system, even as operators strive to lower operating costs and reduce capital expenditure (CAPEX).

Going dry remains cool and efficient

The reduction or complete elimination of water usage for data center cooling remains a hot topic and a strong driver for data center owners when selecting cooling technologies. Additionally, the cost of water treatment, the cost of the water itself or limited water availability may make water-based cooling technologies prohibitive.

Many large data centers are turning to water-free cooling systems to eliminate water usage and to support their organization’s sustainability goals. Today’s water-free economization systems are significantly more efficient than past traditional direct expansion (DX) and chilled water systems, with new pumped refrigerant systems demonstrating annual mechanical power usage effectiveness (PUE) between 1.05 and 1.20.
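To put those mechanical PUE figures in perspective, the short Python sketch below converts them into cooling power. It assumes the common definition of mechanical PUE as IT power plus cooling power, divided by IT power; the 1 MW IT load and the function name are illustrative, not taken from a specific product.

```python
def cooling_power_kw(it_load_kw: float, mechanical_pue: float) -> float:
    """Cooling power implied by a mechanical PUE.

    Assumes mechanical PUE = (IT power + cooling power) / IT power,
    so cooling power = IT power * (mechanical PUE - 1).
    """
    if it_load_kw < 0:
        raise ValueError("IT load must be non-negative")
    if mechanical_pue < 1.0:
        raise ValueError("mechanical PUE cannot be below 1.0")
    return it_load_kw * (mechanical_pue - 1.0)


if __name__ == "__main__":
    it_load_kw = 1000.0  # hypothetical 1 MW IT load
    for pue in (1.05, 1.20):  # range quoted in the article
        kw = cooling_power_kw(it_load_kw, pue)
        print(f"Mechanical PUE {pue:.2f}: about {kw:.0f} kW of cooling power")
```

Under that assumption, a mechanical PUE between 1.05 and 1.20 means the cooling plant draws roughly 5 to 20 percent of the IT load on an annual basis.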

Pumped refrigerants grow

Pumped refrigerant economization systems have become increasingly popular as customers strive for highly efficient, easy-to-use cooling. These waterless systems, some of which have recently been optimized to boost energy savings by 50 percent, also can save approximately 6.75 million gallons of water per year for a 1 MW data center, compared to a chilled water cooling system. These systems save energy by utilizing a refrigerant pump rather than compressors in low to moderate ambient conditions. The pumps consume roughly five percent of the energy the compressors would, thus reducing overall system energy while still maintaining the required cooling.
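A rough Python sketch shows how those two figures play out over a year. The five percent pump-to-compressor ratio and the 6.75 million gallons per MW per year come from the figures above. Everything else is an assumption for illustration: a constant load, hours that run either fully on pumps or fully on compressors, water savings that scale linearly with IT load, and a hypothetical share of pump-mode hours. Fan energy is ignored.

```python
PUMP_ENERGY_FRACTION = 0.05          # article: pumps use roughly 5% of compressor energy
WATER_SAVED_GAL_PER_MW_YEAR = 6.75e6  # article: savings vs. chilled water, per 1 MW


def relative_cooling_energy(pump_hours_fraction: float) -> float:
    """Annual pump-plus-compressor energy relative to compressor-only operation.

    Simplifying assumptions: constant load, and each hour runs either
    entirely on the pump or entirely on the compressors.
    """
    if not 0.0 <= pump_hours_fraction <= 1.0:
        raise ValueError("pump_hours_fraction must be between 0 and 1")
    compressor_hours_fraction = 1.0 - pump_hours_fraction
    return pump_hours_fraction * PUMP_ENERGY_FRACTION + compressor_hours_fraction


def water_saved_gallons(it_load_mw: float, years: float = 1.0) -> float:
    """Water saved vs. chilled water, assuming savings scale linearly with load."""
    return WATER_SAVED_GAL_PER_MW_YEAR * it_load_mw * years


if __name__ == "__main__":
    share = 0.6  # hypothetical: 60% of annual hours in pump mode
    print(f"Relative energy: {relative_cooling_energy(share):.2f} of compressor-only")
    print(f"Water saved, 10 MW site: {water_saved_gallons(10):,.0f} gallons per year")
```

The actual share of pump-mode hours depends on climate and load, so the 60 percent used here should be replaced with site data.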

Particularly useful in today’s common design platforms, where units are purchased by the hundreds, pumped refrigerant systems allow data centers to add capacity without the need for additional chillers or cooling towers. These systems optimize operation based on actual outdoor ambient temperatures and IT load rather than fixed outdoor temperature setpoints. This allows the data center to capture 100 percent of potential economization hours.
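The difference between a fixed changeover setpoint and load-aware control can be sketched in a few lines of Python. This is a simplified illustration, not any vendor's control logic: it assumes the temperature difference needed between return air and outdoor air scales linearly with load, and the 35°C return air temperature, 15°C full-load approach and sample hours are all hypothetical.

```python
RETURN_AIR_C = 35.0          # hypothetical return air temperature
FULL_LOAD_APPROACH_C = 15.0  # hypothetical return-to-outdoor difference needed at 100% load


def pump_only_load_aware(outdoor_c: float, load_fraction: float) -> bool:
    """Pump-only mode allowed when the available temperature difference
    covers what the current load needs (assumed linear in load)."""
    required_c = FULL_LOAD_APPROACH_C * load_fraction
    return RETURN_AIR_C - outdoor_c >= required_c


def pump_only_fixed_setpoint(outdoor_c: float) -> bool:
    """Pump-only mode allowed only below a changeover sized for full load."""
    changeover_c = RETURN_AIR_C - FULL_LOAD_APPROACH_C
    return outdoor_c <= changeover_c


if __name__ == "__main__":
    # (outdoor temperature in C, IT load as a fraction of design load)
    sample_hours = [(10, 1.0), (22, 0.5), (26, 0.5), (30, 0.5), (18, 1.0)]
    aware = sum(pump_only_load_aware(t, load) for t, load in sample_hours)
    fixed = sum(pump_only_fixed_setpoint(t) for t, _ in sample_hours)
    print(f"Pump-only hours, load-aware control: {aware} of {len(sample_hours)}")
    print(f"Pump-only hours, fixed setpoint:     {fixed} of {len(sample_hours)}")
```

In this toy data set, the fixed setpoint misses the warm hours at partial load that the load-aware check still treats as economization hours.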

Pumped refrigerant systems provide a consistent data center environment by physically separating the heat rejection air from the data center air, without cross-contamination or transfer of humidity. When a system is packaged and deployed external to the building, air leakage can be significantly reduced (< 1%) under normal operating conditions, and the system experiences none of the volumetric displacement commonly associated with other dry economization systems.

Cooling IT — naturally

As supply temperatures are increased and humidity requirements are relaxed, alternative cooling options such as direct evaporative cooling systems are being deployed in large, hyperscale environments. A direct evaporative cooling system uses cool external air as the primary means of cooling throughout most of the year. During periods of higher ambient conditions, the system employs a wetted media pad to cool the incoming air. Mechanical “trim” cooling in the form of DX or chilled water is sometimes added to these systems depending on the data center location and desired data center conditions.
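The three operating modes described above can be sketched in Python using the standard saturation-effectiveness relation for a direct evaporative cooler: supply temperature equals dry-bulb temperature minus effectiveness times the difference between dry-bulb and wet-bulb temperatures. The 85 percent pad effectiveness and the 27°C supply limit (the upper end of the ASHRAE recommended range cited earlier) are illustrative assumptions. The sketch ignores humidity limits and cold-weather mixing with return air.

```python
def evaporative_supply_temp_c(dry_bulb_c: float, wet_bulb_c: float,
                              effectiveness: float = 0.85) -> float:
    """Air temperature leaving a wetted media pad.

    Uses the saturation-effectiveness relation:
    T_supply = T_db - effectiveness * (T_db - T_wb).
    The default effectiveness is an illustrative assumption.
    """
    if wet_bulb_c > dry_bulb_c:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb temperature")
    return dry_bulb_c - effectiveness * (dry_bulb_c - wet_bulb_c)


def select_mode(dry_bulb_c: float, wet_bulb_c: float,
                supply_limit_c: float = 27.0,
                effectiveness: float = 0.85) -> str:
    """Pick the simplest mode that keeps supply air at or below the limit."""
    if dry_bulb_c <= supply_limit_c:
        return "outside air"
    if evaporative_supply_temp_c(dry_bulb_c, wet_bulb_c, effectiveness) <= supply_limit_c:
        return "wetted media"
    return "wetted media + trim"


if __name__ == "__main__":
    # Hypothetical outdoor conditions: (dry-bulb C, wet-bulb C)
    for db, wb in [(20, 15), (35, 20), (38, 30)]:
        print(f"{db} C dry-bulb / {wb} C wet-bulb -> {select_mode(db, wb)}")
```

The last case shows why location matters: in hot, humid conditions the wet-bulb temperature is too high for the pad alone, and mechanical trim cooling has to make up the difference.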

Direct evaporative cooling offers sustainability and financial benefits such as low annual water usage, reduced energy consumption with annual mechanical PUE levels often less than 1.10 and an overall lower peak power requirement compared to compressor-based systems. But a system such as this is not for all data centers, because its reliability model is different and it requires a much wider allowable operating window for temperature and humidity to work effectively.

Back to the future

As advanced applications, such as facial recognition and advanced data analytics, emerge and evolve, server processor power requirements will continue to increase as well. That means raising the bar in terms of cooling. Certainly, pumped refrigerant and free-cooling methods will continue to evolve to deliver higher efficiency and lower costs, but new cooling options, currently in development, will likely play a significant role. High-power processors will require innovative thermal management approaches, such as direct cooling at the chip. This involves partially or fully immersing the processor or other components in a liquid for direct heat dissipation, which improves server performance and high-density efficiency while lowering cooling costs.

Bottom line? Cooling remains a mission critical component for data centers and IT spaces, regardless of size. The key to cooling success involves choosing the right application — with an eye toward the future — and taking full advantage of modern control systems that enable remote monitoring to enhance uptime, all while saving energy.

Based in Columbus OH, the author is Vertiv’s director of Global Offerings, Custom and Large Systems, for mission critical thermal management solutions. Formerly part of Emerson Electric, Vertiv became its own entity in December 2016.