Data-Center Uptime & Energy Efficiency

Editor's note: The following is based on "Reducing Data-Center Energy Use Without Compromising Uptime," presented by Don Beaty, PE, FASHRAE, during HPAC Engineering's seventh annual Engineering Green Buildings Conference, held Sept. 23 and 24 in Baltimore as part of HVACR Week 2010.

Many data centers are "mission-critical," meaning essential to the core function of an organization. As such, they must remain operational at all times. However, that does not mean significant improvements in energy efficiency and sustainability cannot be made.

The keys to conserving energy in data centers without impacting uptime are:

- Selecting optimum design conditions.

- Developing practical and effective load characterization.

- Treating power and cooling as a service, rather than physical infrastructure.

This article focuses on balancing energy efficiency with demands for uptime, as well as on the gains possible with a basis of design driven by the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE).

Selecting Optimum Design Conditions

Data centers commonly are multivendor information-technology (IT) environments, with each vendor having its own environmental (e.g., temperature and humidity) specifications. The net result is a lack of standardization and arbitrary decisions about operating temperatures, such as "Cold is better." In practice, temperatures are selected based on the worst-case scenario, which leads to overdesign.

Recognizing a lack of standardization, IT-equipment manufacturers selected ASHRAE to provide a platform for standardizing their environmental specifications. That initiated the creation of ASHRAE Technical Committee (TC) 9.9, Mission Critical Facilities, Technology Spaces and Electronic Equipment.

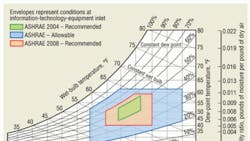

ASHRAE environmental specifications. In 2004, ASHRAE TC 9.9 published the book "Thermal Guidelines for Data Processing Environments."1 Critical to the effort was the participation of all major IT-equipment manufacturers and their agreement on sets of "recommended" and "allowable" conditions. Prolonged exposure of operating equipment to conditions outside the recommended range can reduce reliability and longevity, whereas occasional, short-term excursions into the allowable envelope may be acceptable.

It is important to note:

- The ASHRAE environmental specifications are measured at the air inlet of equipment.

- The ASHRAE environmental specifications are retroactive. In other words, they also apply to legacy equipment.

- The ASHRAE environmental specifications are within the range each major IT-equipment manufacturer used in the past.

- Operating within the recommended range produces a reliable environment.

The 2004 specifications were 68°F to 77°F and 40-percent to 55-percent relative humidity (RH).

In 2008, ASHRAE TC 9.9 revised the environmental specifications,2 widening the range and enabling more hours for economizers. The dry-bulb lower limit was decreased to 64.4°F, while the upper limit was increased to 80.6°F. The lower moisture limit was decreased to a 41.9°F dew point, while the upper limit was increased to 60-percent RH and a 59°F dew point (Figure 1).
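To make the difference between the two envelopes concrete, the following is a minimal sketch that tests an inlet reading against the 2004 and 2008 recommended ranges quoted above. It assumes readings are given as dry-bulb and dew-point temperatures in °F, and it derives relative humidity with the Magnus approximation; that formula and its constants are an assumption of this sketch, not something the ASHRAE guidelines prescribe. The function names are hypothetical.

```python
import math

def f_to_c(temp_f):
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0

def relative_humidity(dry_bulb_f, dew_point_f):
    """Approximate RH (%) from dry-bulb and dew-point temperatures.

    Assumption: Magnus approximation with b = 17.62 and c = 243.12 °C.
    """
    b, c = 17.62, 243.12
    t, td = f_to_c(dry_bulb_f), f_to_c(dew_point_f)
    return 100.0 * math.exp(b * td / (c + td) - b * t / (c + t))

def in_2004_recommended(dry_bulb_f, dew_point_f):
    """2004 recommended range: 68°F to 77°F dry bulb, 40 % to 55 % RH."""
    rh = relative_humidity(dry_bulb_f, dew_point_f)
    return 68.0 <= dry_bulb_f <= 77.0 and 40.0 <= rh <= 55.0

def in_2008_recommended(dry_bulb_f, dew_point_f):
    """2008 recommended range: 64.4°F to 80.6°F dry bulb,
    41.9°F to 59°F dew point, and no more than 60 % RH."""
    rh = relative_humidity(dry_bulb_f, dew_point_f)
    return (64.4 <= dry_bulb_f <= 80.6
            and 41.9 <= dew_point_f <= 59.0
            and rh <= 60.0)

# Hypothetical inlet readings: (dry bulb °F, dew point °F)
for reading in [(75.0, 55.0), (79.0, 50.0), (66.0, 45.0), (72.0, 40.0)]:
    print(reading, in_2004_recommended(*reading), in_2008_recommended(*reading))
```

Readings of 79°F and 66°F fall outside the 2004 range but inside the 2008 range, which is exactly the widening that yields more economizer hours.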

IT equipment is expected to function for short to moderate periods of time outside of the recommended range, but within the allowable range. This may be all that is necessary to accomplish a "compressorless design," which can have a significant impact on energy use, maintenance, and capital costs.

IT equipment typically costs much more than power and cooling infrastructure, so operating it outside of manufacturers' recommendations may be risky. The best approach is to reach consensus with stakeholders on the frequency and duration of excursions into the allowable range.
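Agreeing on the frequency and duration of excursions presumes they can be measured. The following is a minimal sketch that summarizes excursions from a series of hourly inlet readings. It assumes hourly dry-bulb readings in °F and checks only the 2008 recommended dry-bulb limits, ignoring humidity; `summarize_excursions` and `ExcursionSummary` are hypothetical names.

```python
from dataclasses import dataclass

# Assumption: 2008 recommended dry-bulb limits only; humidity is not checked.
REC_LOW_F = 64.4
REC_HIGH_F = 80.6

@dataclass
class ExcursionSummary:
    count: int          # number of separate excursions
    total_hours: int    # hours spent outside the recommended range
    longest_hours: int  # duration of the longest single excursion

def summarize_excursions(hourly_inlet_f):
    """Count contiguous runs of hourly readings outside the recommended range."""
    count = total = longest = run = 0
    for temp in hourly_inlet_f:
        if not (REC_LOW_F <= temp <= REC_HIGH_F):
            if run == 0:       # a new excursion starts here
                count += 1
            run += 1
            total += 1
            longest = max(longest, run)
        else:
            run = 0            # back inside the recommended range
    return ExcursionSummary(count, total, longest)

print(summarize_excursions([75, 82, 83, 79, 81, 81, 81, 70]))
```

For the sample series this reports two excursions totaling 5 hours, the longest lasting 3 hours; the summary can then be compared with whatever limits the stakeholders agree on.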

Future changes to ASHRAE environmental specifications. ASHRAE TC 9.9's IT-equipment-manufacturer subcommittee is pursuing expansion of the environmental envelope. This requires research to confirm the consequences of relaxing humidity and temperature limits.

As chip form factors continue to shrink, the separation between lines on chips decreases, which heightens concerns about contamination.

Developing Practical and Effective Load Characterization

Server/IT-equipment nameplate data (Figure 2) should not be used when sizing equipment, because they are intended for regulatory and safety purposes, not load determination.

"Thermal Guidelines for Data Processing Environments"2 provides a unified approach to reporting actual load: the thermal report (Figure 3). Energy Star for servers uses a similar form of reporting.

In a thermal report, multiple configurations of IT equipment are shown, as configuration affects power and cooling requirements.

It is important that a thermal report be requested for all IT equipment.
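Because a thermal report lists several configurations, the load used for planning should come from the configuration actually deployed. The following is a minimal sketch of that lookup. The field names, the "minimum"/"typical"/"full" labels, and all numeric values are assumptions for illustration; they are not taken from Figure 3.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ThermalReportEntry:
    """One row of a thermal report (field names are assumptions of this sketch)."""
    configuration: str      # e.g., "minimum", "typical", "full"
    heat_release_w: float   # measured heat release for this configuration, W
    airflow_cfm: float      # nominal airflow for this configuration, cfm

def heat_release_for(report, configuration):
    """Look up the heat release for the configuration actually being deployed."""
    for entry in report:
        if entry.configuration == configuration:
            return entry.heat_release_w
    raise KeyError(f"no thermal-report entry for {configuration!r}")

def deployed_load_w(report, counts):
    """Sum heat release over a deployment, e.g., {"typical": 10, "full": 2}."""
    return sum(heat_release_for(report, cfg) * n for cfg, n in counts.items())

# Illustrative values only; they are not taken from Figure 3.
report = [
    ThermalReportEntry("minimum", 300.0, 25.0),
    ThermalReportEntry("typical", 500.0, 35.0),
    ThermalReportEntry("full", 700.0, 50.0),
]
print(deployed_load_w(report, {"typical": 10, "full": 2}))  # 6400.0
```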

If nameplate data are used, the net result will be excessive capacity, which leads to oversized equipment and wasted energy. Using data from figures 2 and 3:

- 920 W (nameplate) ÷ 600 W (actual) = 1.53, or 53 percent more cooling

- 920 W (nameplate) ÷ 450 W (actual) = 2.04, or 104 percent more cooling
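The two ratios above can be reproduced with a few lines of arithmetic. The following is a minimal sketch; `oversizing` and `stranded_capacity_w` are hypothetical helper names, and the fleet calculation simply multiplies the per-unit mismatch by a unit count.

```python
def oversizing(nameplate_w, actual_w):
    """Ratio of nameplate to actual load, and the resulting extra cooling in percent."""
    ratio = nameplate_w / actual_w
    return ratio, (ratio - 1.0) * 100.0

def stranded_capacity_w(nameplate_w, actual_w, unit_count):
    """Cooling capacity provisioned but never used when a fleet is sized to nameplate."""
    return (nameplate_w - actual_w) * unit_count

for actual_w in (600.0, 450.0):
    ratio, extra = oversizing(920.0, actual_w)
    print(f"920 W / {actual_w:.0f} W = {ratio:.2f}, or {extra:.0f} percent more cooling")
```

This prints the same 1.53 (53 percent) and 2.04 (104 percent) figures. For a hypothetical fleet of 200 servers drawing 600 W each, `stranded_capacity_w(920.0, 600.0, 200)` returns 64000.0, i.e., 64 kW of cooling that would be provisioned but never used.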

If such sizing mismatches occur across all IT equipment, the impact will be significant. Proper equipment sizing prevents stranded assets and missed energy-efficiency opportunities.

Treating Power and Cooling as a Service

The main customer of a data center is IT equipment. The customers of IT equipment are applications.

ASHRAE defines the environmental conditions to be measured at the air inlet of IT equipment. Upstream of the air inlet are operator spaces (e.g., aisles that give operators access to the equipment). Both overall performance and energy performance are best served by treating everything upstream of the IT-equipment air inlet as the domain of service providers.

Treating power and cooling as a service, with IT equipment as the interface point, brings great clarity to the basis of design. It saves energy by delivering the required conditions at the point of use, and it gives facilities operators the freedom to adjust their systems and operations to perform as efficiently as possible while continuing to meet the terms of service-level agreements (SLAs).

An SLA provides visibility between a customer and a service provider. IT-equipment requirements and services are best defined in an SLA.

Figure 4 shows a sample cooling SLA based on ASHRAE environmental specifications. Figure 5 shows a sample redundancy SLA that addresses redundancy at the zone level. Data centers host many applications, and those applications can vary greatly in the level of redundancy they require. Breaking areas into zones allows energy to be saved without compromising missions.
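Because the SLA interface point is the IT-equipment air inlet, compliance can be checked directly against inlet readings, zone by zone. The following is a minimal sketch under stated assumptions: the `ZoneSLA` structure, the zone names, the 80.6°F limit (the 2008 recommended upper dry-bulb limit), and the readings are all hypothetical, and the redundancy term is only recorded, not verified.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ZoneSLA:
    """Service terms for one zone (structure assumed for illustration)."""
    zone: str
    max_inlet_f: float  # cooling term, measured at the IT-equipment air inlet
    redundancy: str     # redundancy term, e.g., "N+1" or "2N"; recorded, not checked here

def cooling_sla_violations(slas, inlet_readings_f):
    """Return the zones whose hottest inlet reading exceeds the zone's SLA limit."""
    violations = []
    for sla in slas:
        readings = inlet_readings_f.get(sla.zone, [])
        if readings and max(readings) > sla.max_inlet_f:
            violations.append(sla.zone)
    return violations

slas = [ZoneSLA("A", 80.6, "2N"), ZoneSLA("B", 80.6, "N+1")]
print(cooling_sla_violations(slas, {"A": [75.0, 78.0], "B": [79.0, 82.0]}))  # ['B']
```

Anything upstream of the inlet (supply temperatures, fan speeds, economizer modes) stays the provider's choice, as long as this check keeps passing.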

Redundancy and part load. Figure 6 shows that designing for ultimate load with no redundancy results in 100-percent utilization of components. Typically, the higher the utilization rate, the greater the energy efficiency. However, the likelihood that uptime will be compromised in the event of equipment failure also increases with utilization rate. Whether to add redundancy, and how much (e.g., N+1, 2N, 2[N+1]), therefore becomes a trade-off decision.
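The trade-off can be quantified by computing component utilization under each redundancy scheme. The following is a minimal sketch; the 1,000-kW load and 250-kW unit size are hypothetical, and it assumes identical units with N taken as the number of units needed to carry the load.

```python
import math

def units_required(load_kw, unit_kw, scheme):
    """Number of identical units installed under a given redundancy scheme."""
    n = math.ceil(load_kw / unit_kw)  # N: units needed to carry the load
    return {"N": n, "N+1": n + 1, "2N": 2 * n, "2(N+1)": 2 * (n + 1)}[scheme]

def utilization(load_kw, unit_kw, scheme):
    """Fraction of installed capacity actually used at the design load."""
    return load_kw / (units_required(load_kw, unit_kw, scheme) * unit_kw)

for scheme in ("N", "N+1", "2N", "2(N+1)"):
    print(scheme, units_required(1000, 250, scheme), f"{utilization(1000, 250, scheme):.0%}")
```

With these inputs, utilization falls from 100 percent (N, 4 units) to 80 percent (N+1), 50 percent (2N), and 40 percent (2[N+1]), so each added level of redundancy pushes components further into part-load operation.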

Planning using zones. With areas broken into zones, different cooling strategies can be deployed across a data center.

Using zones helps ensure that extremely dense configurations are not left with insufficient cooling. It also provides flexibility and the ability to provision to scale.

Figure 7 shows a space broken into zones. Table 1 shows zones configured differently. Having a data center organized into zones can save energy, maintenance, and capital costs.

Capacity Planning

Properly measuring and monitoring key metrics within a facility helps ensure that the right decisions regarding efficiency are made.

By profiling the loads of zones or clusters, one can get a holistic view of whether a layout is optimized for specific demands. Ideally, all zones or clusters within a facility operate at or near full load for most of the year. This reduces inefficiencies in distribution equipment.
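Load profiling can be reduced to a simple question per zone: for what share of the recorded hours does it run near full load? The following is a minimal sketch. The 80-percent "near full load" threshold, the one-half cutoff standing in for "most of the year," and the sample data are all assumptions for illustration, not published criteria.

```python
def near_full_load_fraction(hourly_load_kw, capacity_kw, threshold=0.8):
    """Fraction of hours in which a zone runs at or above threshold x capacity.

    The 0.8 default is an arbitrary illustration, not a published criterion.
    """
    if not hourly_load_kw:
        return 0.0
    busy = sum(1 for load in hourly_load_kw if load >= threshold * capacity_kw)
    return busy / len(hourly_load_kw)

def underused_zones(profiles, capacities_kw, threshold=0.8):
    """Zones that are near full load for less than half of the recorded hours."""
    return [zone for zone, loads in profiles.items()
            if near_full_load_fraction(loads, capacities_kw[zone], threshold) < 0.5]

profiles = {"A": [90.0, 85.0, 95.0, 70.0], "B": [40.0, 50.0, 45.0, 60.0]}
capacities = {"A": 100.0, "B": 100.0}
print(underused_zones(profiles, capacities))  # ['B']
```

Zone B never approaches its capacity, which flags it as a candidate for consolidation or for deferring further infrastructure.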

Data gathering helps stakeholders to understand the current and past state of a data center and plan for the future.

Modularity allows delayed deployment and flexibility in deployment strategies. It shortens installation time (the first module can be installed sooner than an entire system) and avoids operating more equipment than the load requires. Capacity can be expanded more easily by adding modules than by retrofitting the central plant. Lastly, modularity allows capital-expenditure investments to be delayed until a given capacity is required.
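Deferring capital until capacity is required can be expressed as a simple deployment rule: add modules only in the year the forecast load first exceeds installed capacity. The following is a minimal sketch; the forecast, the 250-kW module size, and the `module_schedule` name are hypothetical, and real plans would also allow for lead time and redundancy.

```python
import math

def module_schedule(forecast_kw_by_year, module_kw):
    """Return {year: modules_added} so installed capacity always covers the forecast load."""
    installed = 0
    schedule = {}
    for year, load_kw in forecast_kw_by_year:
        needed = math.ceil(load_kw / module_kw)
        if needed > installed:
            schedule[year] = needed - installed  # deploy only the shortfall
            installed = needed
    return schedule

forecast = [(1, 300.0), (2, 450.0), (3, 500.0), (4, 900.0)]
print(module_schedule(forecast, 250.0))  # {1: 2, 4: 2}
```

Two modules are deployed on Day 1 and two more only in year 4, rather than all four up front.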

Life-Cycle Mismatch and Rightsizing

Data centers must support multiple generations of IT equipment, as power- and cooling-infrastructure lifetimes often are 15 to 25 years vs. only three to five years for IT equipment. Thus, data-center and IT-equipment planning must consider:

- Day 1 vs. ultimate design density.

- Energy efficiency and sustainability.

- Carbon cap-and-trade potential.

- Capital expenditures and/or total cost of ownership (TCO).

- Project location, climate, and utility capacity.

- The ability to upgrade without compromising reliability or availability.

Master planning should be done at all levels of deployment, including electrical, mechanical, and IT.

Capacity planning leads to rightsizing. Rightsizing involves deciding what components and infrastructure should be provisioned to meet a data center's needs.

Encapsulated Energy

Power draw represents an estimated 19 percent of the energy used over the life cycle of a typical personal computer. The remaining 81 percent is attributable to raw-materials processing, manufacturing, transportation, and disposal. As a result, IT-equipment manufacturers are transitioning away from disposable equipment.
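The 19/81 split implies that encapsulated energy is roughly 81 ÷ 19 ≈ 4.3 times the energy drawn in use. The following is a minimal sketch of that arithmetic; the 190-kWh use-phase figure is hypothetical, and the 19-percent share is taken from the estimate above.

```python
def lifecycle_energy(use_phase_kwh, use_share=0.19):
    """Split life-cycle energy, assuming use-phase power draw is `use_share` of the total."""
    total_kwh = use_phase_kwh / use_share
    return total_kwh, total_kwh - use_phase_kwh

total, embodied = lifecycle_energy(190.0)
print(f"total about {total:.0f} kWh, encapsulated about {embodied:.0f} kWh")
```

For 190 kWh of use-phase energy, this gives a total of about 1,000 kWh, of which about 810 kWh is encapsulated, which is why extending equipment life and swapping components matters.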

Equipment packaging can be designed to optimize airflow through a device. Choosing packaging that is easy to upgrade can yield substantial benefits, because swapping components rather than replacing entire units saves significant amounts of encapsulated energy.

Energy efficiency must be considered on a cradle-to-grave basis.

Conclusion

Unfortunately, no single plan for balancing uptime and energy efficiency in mission-critical facilities exists. The proper balance varies by facility and requires rigorous engineering analysis.

By understanding the operating environment and actual energy demands of a facility, one can make informed decisions regarding various trade-offs to achieve the right balance of uptime and energy efficiency.

References

- ASHRAE. (2004). Thermal guidelines for data processing environments. Atlanta: American Society of Heating, Refrigerating and Air-Conditioning Engineers.

- ASHRAE. (2009). Thermal guidelines for data processing environments (2nd ed.). Atlanta: American Society of Heating, Refrigerating and Air-Conditioning Engineers.

The founder and president of DLB Associates, provider of program-management, architectural/engineering, and construction-management services, Don Beaty, PE, FASHRAE, chaired American Society of Heating, Refrigerating and Air-Conditioning Engineers Technical Committee (TC) 9.9, Mission Critical Facilities, Technology Spaces and Electronic Equipment, from its inception until June 2006. Currently the publications chair of TC 9.9, he has presented on data-center topics in more than 20 countries and published more than 50 technical papers and articles. He is a licensed engineer in more than 40 states and a longtime member of HPAC Engineering’s Editorial Advisory Board.