Data centers are among the most energy-intensive types of facilities, and they are growing dramatically in terms of size and energy intensity.1 As a result, stakeholders ranging from data-center managers to policymakers have shown increasing interest over the last few years in improving data-center energy efficiency. Several industry and government organizations have developed tools, guidelines, and training programs to help work toward this goal.

There are many opportunities to reduce energy use in data centers, and benchmarking studies reveal a wide range of efficiency practices. Data-center operators may not be aware of how efficient their facilities are relative to those of their peers, even those with similar service levels. Benchmarking is an effective way to compare one facility to another and track the performance of a given facility over time.

Toward that end, this article will present key metrics that facility managers can use to assess, track, and manage the efficiency of the infrastructure systems in data centers and thereby identify potential efficiency actions. Most of the benchmarking data presented in this article are drawn from the data-center benchmarking database at Lawrence Berkeley National Laboratory (LBNL). The database was developed from studies commissioned by the California Energy Commission, Pacific Gas and Electric Co., the U.S. Department of Energy, and the New York State Energy Research and Development Authority.

DATA-CENTER INFRASTRUCTURE EFFICIENCY

Data-center infrastructure efficiency (DCIE) is the ratio of information-technology (IT) equipment energy use to total data-center energy use. DCIE can be calculated for annual site energy, annual source energy, or electrical power. For example:

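$$\mathrm{DCIE}_{\mathrm{site}} = \frac{\text{IT site energy use}}{\text{total site energy use}}$$

$$\mathrm{DCIE}_{\mathrm{source}} = \frac{\text{IT source energy use}}{\text{total source energy use}}$$

$$\mathrm{DCIE}_{\mathrm{elecpower}} = \frac{\text{IT electrical power}}{\text{total electrical power}}$$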

Data-center energy use is the sum of all of the energy used by a data center, including campus chilled water and steam, if present. An online data-center energy profiler, available at http://dcpro.ppc.com, can be used to assess DCIE for site and source energy.2

DCIE provides an overall measure of infrastructure efficiency: a value that is low relative to the peer group suggests greater potential to improve the efficiency of infrastructure systems, such as HVAC, power distribution, and lighting, while a high value suggests less potential. It is not a measure of IT efficiency. Therefore, a data center that has a high DCIE still may have major opportunities to reduce overall energy use through IT efficiency measures, such as virtualization. DCIE is influenced by climate and tier level, so the potential to improve it may be limited by those factors. Some data-center professionals prefer to use the inverse of DCIE, known as power usage effectiveness (PUE), but both metrics serve the same purpose.
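As a rough illustration of the ratio defined above, the following Python sketch computes DCIE and its inverse from two readings taken on the same basis (site energy, source energy, or electrical power). The function names and the sample figures are hypothetical and are not drawn from the LBNL database.

```python
def dcie(it_use, total_use):
    """Return DCIE: IT-equipment use divided by total data-center use.

    Both arguments must share one basis and one unit (for example,
    annual site kWh, or instantaneous kW of electrical power).
    """
    if total_use <= 0:
        raise ValueError("total_use must be positive")
    if not 0 <= it_use <= total_use:
        raise ValueError("it_use must lie between 0 and total_use")
    return it_use / total_use


def inverse_dcie(it_use, total_use):
    """Return the inverse of DCIE (total use divided by IT use)."""
    if it_use <= 0:
        raise ValueError("it_use must be positive to take the inverse")
    return 1.0 / dcie(it_use, total_use)


# Hypothetical example: 600 kW of IT load in a facility drawing 1,000 kW.
it_kw, total_kw = 600.0, 1000.0
print(f"DCIE = {dcie(it_kw, total_kw):.2f}")
print(f"Inverse of DCIE = {inverse_dcie(it_kw, total_kw):.2f}")
```

Because both metrics come from the same two readings, tracking either one over time gives the same picture of infrastructure efficiency.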

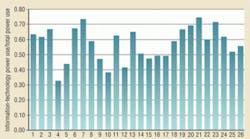

The benchmarking data in the LBNL database show a DCIEelecpower range from just over 0.3 to 0.75 (Figure 1). Some data centers are capable of achieving 0.9 or higher.3

TEMPERATURE AND HUMIDITY RANGES

American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) guidelines provide a range of allowable and recommended supply temperatures and humidity at the inlet to IT equipment.4

The recommended temperature range is between 64˚F and 80˚F, while the allowable is 59˚F to 90˚F (Figure 2). A low supply-air temperature and a small temperature differential between supply and return typically indicate the opportunity to improve air management, raise supply-air temperature, and thereby reduce energy use. Air management can be improved through better isolation between cold and hot aisles using blanking panels and strip curtains, the optimization of supply-diffuser and return-grille configuration, better cable management, blocked gaps in floor tiles, etc.
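The temperature ranges quoted above lend themselves to a simple screening check. The sketch below classifies IT-inlet temperature readings against those ranges. The function name, the inclusive treatment of the range limits, and the sample readings are assumptions made for illustration.

```python
# Ranges quoted in the text, in degrees Fahrenheit (limits treated as inclusive).
RECOMMENDED_F = (64.0, 80.0)
ALLOWABLE_F = (59.0, 90.0)


def classify_inlet_temp(temp_f):
    """Classify one IT-inlet temperature reading (deg F)."""
    if RECOMMENDED_F[0] <= temp_f <= RECOMMENDED_F[1]:
        return "recommended"
    if ALLOWABLE_F[0] <= temp_f <= ALLOWABLE_F[1]:
        return "allowable"
    return "out of range"


# Hypothetical rack-inlet readings from a walk-through survey.
for reading in (61.0, 72.5, 84.0, 93.0):
    print(f"{reading:5.1f} F -> {classify_inlet_temp(reading)}")
```

Readings clustered near the bottom of the recommended range may indicate room to raise the supply-air temperature once air management has been improved.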

The ASHRAE-recommended humidity ranges from a dew point of 42˚F to a dew point of 59˚F, with a maximum of 60-percent relative humidity (Figure 3). The allowable relative-humidity range is between 20 and 80 percent with a maximum dew point of 63˚F. A small, tightly controlled relative-humidity range suggests opportunities to reduce energy use, especially if there is active humidification and dehumidification. Centralized active control of humidification units reduces conflicting operations between individual units, improving energy efficiency and capacity.
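A similar check can be sketched for humidity. The example below takes a measured dew point and relative humidity and classifies them against the ranges given above. It assumes that the 60-percent figure is an upper limit on relative humidity within the recommended range; the function name and sample values are hypothetical.

```python
def classify_inlet_humidity(dew_point_f, rh_percent):
    """Classify one humidity reading taken at the IT-equipment inlet.

    Assumption: 60 percent is treated as the maximum relative humidity
    for the recommended range.
    """
    if 42.0 <= dew_point_f <= 59.0 and rh_percent <= 60.0:
        return "recommended"
    if 20.0 <= rh_percent <= 80.0 and dew_point_f <= 63.0:
        return "allowable"
    return "out of range"


# Hypothetical (dew point in deg F, relative humidity in percent) readings.
for dew_point, rh in ((50.0, 45.0), (61.0, 70.0), (65.0, 85.0)):
    print(f"dew point {dew_point} F, RH {rh}% -> "
          f"{classify_inlet_humidity(dew_point, rh)}")
```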

Because temperature and humidity affect the reliability and life of IT equipment, any changes to air management and temperature and humidity settings should be evaluated with metrics, such as Rack Cooling Index, which can be used to assess the thermal health of IT equipment.5 Many data centers operate well without active humidity control. While humidity control is important for physical media, such as tape storage, it generally is not critical for data-center equipment. Studies by LBNL and the Electrostatic Discharge Association suggest that humidity may not need to be controlled as tightly as it often is.

RETURN TEMPERATURE INDEX

The Return Temperature Index (RTI) is a measure of energy performance resulting from air management.6 The primary purpose of improving air management is to isolate hot and cold air streams. This allows elevation of supply and return temperatures and maximizes the difference between them while keeping inlet temperatures within ASHRAE-recommended limits. It also allows reduction of system airflow rate. This strategy allows HVAC equipment to operate more efficiently. RTI is ideal at 100 percent, at which return-air temperature is the same as the temperature of the air leaving IT equipment (Figure 4).

RTI also is a measure of the excess or deficit of supply air to server equipment. An RTI value of less than 100 percent indicates that some supply air is bypassing racks, while a value greater than 100 percent indicates recirculation of air from a hot aisle. An RTI value can be improved through better air management.
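The article does not spell out the RTI formula. A common formulation, assumed here, divides the temperature difference across the air-handling equipment (return minus supply) by the temperature rise across the IT equipment and expresses the result as a percentage. The sketch below applies that formulation; the function names, the one-percentage-point tolerance band, and the sample temperatures are hypothetical.

```python
def return_temperature_index(t_supply, t_return, t_equip_in, t_equip_out):
    """Return RTI in percent (assumed formulation, see lead-in).

    RTI = (return - supply) / (equipment outlet - equipment inlet) * 100
    All temperatures must use the same unit.
    """
    equip_rise = t_equip_out - t_equip_in
    if equip_rise <= 0:
        raise ValueError("equipment outlet must be warmer than inlet")
    return (t_return - t_supply) * 100.0 / equip_rise


def interpret_rti(rti_percent, tolerance=1.0):
    """Map an RTI value to the interpretation given in the text."""
    if rti_percent < 100.0 - tolerance:
        return "bypass: some supply air is not passing through the racks"
    if rti_percent > 100.0 + tolerance:
        return "recirculation: hot-aisle air is re-entering the racks"
    return "balanced"


# Hypothetical readings in deg F.
rti = return_temperature_index(t_supply=60.0, t_return=75.0,
                               t_equip_in=60.0, t_equip_out=85.0)
print(f"RTI = {rti:.0f}% -> {interpret_rti(rti)}")
```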

UPS LOAD FACTOR

Uninterruptible-power-supply (UPS) load factor is the ratio of UPS load to UPS design capacity, providing a measure of UPS system oversizing and redundancy. A higher value indicates a more efficient system. UPS load factors below 0.5 may indicate an opportunity for efficiency improvements, although the extent of the opportunity is highly dependent on the required redundancy level (Figure 5). Load factor can be improved in several ways, including:

- Shutting down some UPS modules when the redundancy level exceeds N + 1 or 2N.

- Installing a scalable/modular UPS.

- Installing a smaller UPS sized to fit the present load.

- Transferring loads among UPS modules to maximize the load factor of each active UPS.
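To make the effect of these measures concrete, the sketch below computes the load factor of a hypothetical system of identical UPS modules as modules are taken out of service. The module size, load, and function names are assumptions for illustration. The sketch does not check whether the required redundancy level is still met, which must be confirmed separately.

```python
def ups_load_factor(load_kw, capacity_kw):
    """Return UPS load divided by UPS design capacity."""
    if capacity_kw <= 0:
        raise ValueError("capacity_kw must be positive")
    if load_kw < 0:
        raise ValueError("load_kw must not be negative")
    return load_kw / capacity_kw


def load_factor_with_active_modules(load_kw, module_kw, active_modules):
    """Load factor when the load is shared by identical active modules."""
    if active_modules < 1:
        raise ValueError("at least one module must be active")
    return ups_load_factor(load_kw, module_kw * active_modules)


# Hypothetical system: four 250-kW modules serving a 300-kW load.
for active in (4, 3, 2):
    factor = load_factor_with_active_modules(300.0, 250.0, active)
    print(f"{active} active modules -> load factor {factor:.2f}")
```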

UPS SYSTEM EFFICIENCY

UPS system efficiency is the ratio of UPS output power to UPS input power. UPS efficiency varies depending on its load factor. Therefore, the benchmark for this metric depends on the load factor of a UPS system. When UPS load factor is below 40 percent, a system can be highly inefficient because of no-load losses. Figure 6 shows a range of UPS efficiencies from factory measurements of different topologies. Figure 7 shows UPS efficiencies for data centers in the LBNL database. These measurements, taken several years ago, illustrate that efficiencies can vary considerably. Manufacturers claim that improved efficiencies now are available. When selecting UPS systems, it is important to evaluate performance over the expected loading range.

Selection of more efficient UPS systems, especially ones that perform well at expected load factors (e.g., below 40 percent), improves energy savings. For non-critical IT work, bypassing a UPS system using factory-supplied hardware and controls may be an option. Reducing redundancy levels by using modular UPS systems also improves efficiency.
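The influence of no-load losses on efficiency at low load factors can be illustrated with a simple model. The sketch below takes efficiency as output power divided by input power and assumes that losses consist of a fixed no-load component plus a component proportional to output. The loss fractions and the 500-kW capacity are hypothetical values chosen for illustration; they are not measurements from the LBNL database or from any manufacturer.

```python
def ups_efficiency(output_kw, input_kw):
    """Return UPS efficiency: output power divided by input power."""
    if input_kw <= 0:
        raise ValueError("input_kw must be positive")
    return output_kw / input_kw


def modeled_input_kw(output_kw, capacity_kw,
                     no_load_loss_frac=0.03, proportional_loss_frac=0.04):
    """Estimate input power from an assumed two-part loss model.

    no_load_loss_frac:      fixed loss as a fraction of capacity (assumed)
    proportional_loss_frac: loss as a fraction of output power (assumed)
    """
    losses_kw = (no_load_loss_frac * capacity_kw
                 + proportional_loss_frac * output_kw)
    return output_kw + losses_kw


capacity_kw = 500.0  # hypothetical UPS capacity
for load_factor in (0.2, 0.4, 0.6, 0.8):
    output_kw = load_factor * capacity_kw
    efficiency = ups_efficiency(output_kw,
                                modeled_input_kw(output_kw, capacity_kw))
    print(f"load factor {load_factor:.1f}: efficiency {efficiency:.3f}")
```

Under these assumptions, the fixed loss is a larger share of input power at low load factors, which is why efficiency should be evaluated over the expected loading range rather than at full load only.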

COOLING-SYSTEM EFFICIENCY

The key metrics and benchmarks for evaluating the efficiency of cooling systems in data centers are no different than those typically used in other commercial buildings. These include chiller-plant efficiency (kilowatt per ton) and pumping efficiency (horsepower per gallon per minute). Figure 8 shows cooling-plant efficiency for LBNL-benchmarked data centers. Based on data from the LBNL database, 0.8 kW per ton could be considered a good-practice benchmark, while 0.6 kW per ton could be considered a better-practice benchmark.
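As a minimal sketch, cooling-plant efficiency can be computed from measured plant power and cooling load and then compared with the two benchmarks above. The function names, the rating labels, and the sample figures are hypothetical.

```python
GOOD_PRACTICE_KW_PER_TON = 0.8
BETTER_PRACTICE_KW_PER_TON = 0.6


def plant_kw_per_ton(plant_power_kw, cooling_load_tons):
    """Return cooling-plant efficiency in kW per ton of cooling delivered."""
    if cooling_load_tons <= 0:
        raise ValueError("cooling_load_tons must be positive")
    return plant_power_kw / cooling_load_tons


def rate_plant(kw_per_ton):
    """Compare a kW-per-ton value with the benchmarks quoted in the text."""
    if kw_per_ton <= BETTER_PRACTICE_KW_PER_TON:
        return "meets better-practice benchmark"
    if kw_per_ton <= GOOD_PRACTICE_KW_PER_TON:
        return "meets good-practice benchmark"
    return "does not meet good-practice benchmark"


# Hypothetical plant: 350 kW of plant power for 500 tons of cooling.
value = plant_kw_per_ton(350.0, 500.0)
print(f"{value:.2f} kW per ton: {rate_plant(value)}")
```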

AIR-SIDE-ECONOMIZER UTILIZATION

Air-side-economizer utilization characterizes the extent to which an air-side-economizer system is being used to provide “free” cooling. It is defined as the percentage of hours in a year that an economizer system can be in full operation (i.e., without any cooling being provided by the chiller plant). The number of hours that an air-side economizer is being utilized can be compared with the maximum hours possible for the climate in which the data center is located, which can be determined from simulation analysis. For example, Figure 9 shows results from a simulation of four different climate conditions. The Green Grid, a global consortium dedicated to developing and promoting data-center energy efficiency, has developed a tool to estimate savings from air- and water-side free cooling, although the assumptions are different than those used for the results presented in Figure 9.8
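The comparison described above can be sketched in a few lines. The example computes utilization as a percentage of the hours in a year and then expresses the actual hours as a share of the maximum possible for the climate. The hour counts are hypothetical; in practice, the maximum would come from a simulation analysis for the site.

```python
HOURS_PER_YEAR = 8760


def economizer_utilization(full_economizer_hours,
                           hours_in_year=HOURS_PER_YEAR):
    """Percentage of the year spent in full economizer operation."""
    if not 0 <= full_economizer_hours <= hours_in_year:
        raise ValueError("hours must lie between 0 and hours_in_year")
    return 100.0 * full_economizer_hours / hours_in_year


def share_of_climate_potential(actual_hours, max_possible_hours):
    """Actual economizer hours as a percentage of the climate maximum."""
    if max_possible_hours <= 0:
        raise ValueError("max_possible_hours must be positive")
    return 100.0 * actual_hours / max_possible_hours


# Hypothetical site: 3,000 logged hours against a simulated 5,000-hour maximum.
actual, potential = 3000, 5000
print(f"Utilization: {economizer_utilization(actual):.1f}% of the year")
print(f"Share of climate potential: "
      f"{share_of_climate_potential(actual, potential):.0f}%")
```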

SUMMARY

Data-center operators can use the metrics discussed in this article to track the efficiency of their infrastructure systems. The system-level metrics in particular help operators identify potential efficiency actions. Data from the LBNL benchmarking database show a wide range of efficiency across data centers.

DCIE is gaining increasing acceptance as a metric for overall infrastructure efficiency and can be computed in terms of site energy, source energy, or electrical load. However, DCIE does not address the efficiency of IT equipment. Organizations, such as The Green Grid, are working to develop productivity metrics (e.g., data-center energy productivity) that will characterize the work done per unit of energy. The challenge is to categorize the different kinds of work done in a data center and identify appropriate ways to measure them. As those metrics become available, they will complement the infrastructure metrics described in this article.

REFERENCES

1. U.S. EPA. (2007). Report to Congress on server and data center energy efficiency: Public Law 109-431. Washington, DC: U.S. Environmental Protection Agency.

2. U.S. DOE. (n.d.). Data center energy profiler. Retrieved from http://dcpro.ppc.com

3. Greenberg, S., Khanna, A., & Tschudi, W. (2009, June). High performance computing with high efficiency. Paper to be presented at the 2009 ASHRAE Annual Conference, Louisville, KY.

4. ASHRAE. (2008). 2008 ASHRAE environmental guidelines for datacom equipment. Atlanta: American Society of Heating, Refrigerating and Air-Conditioning Engineers.

5. Herrlin, M.K. (2005). Rack cooling effectiveness in data centers and telecom central offices: The Rack Cooling Index (RCI). ASHRAE Transactions, 111, 725-731.

6. Herrlin, M.K. (2007, September). Improved data center energy efficiency and thermal performance by advanced airflow analysis. Paper presented at the Digital Power Forum, San Francisco, CA.

7. Syska Hennessy Group. (n.d.). The use of outside air economizers in data center environments. Retrieved from http://www.syska.com/thought/whitepapers/wpabstract.asp?idWhitePaper=14

8. The Green Grid. (n.d.). Free cooling estimated savings calculation tool. Retrieved from http://cooling.thegreengrid.org/calc_index.html

Paul Mathew, PhD, is a staff scientist; Steve Greenberg, PE, is an energy-management engineer; Srirupa Ganguly is a research associate; Dale Sartor, PE, is the applications team leader; and William Tschudi, PE, is a program manager for Lawrence Berkeley National Laboratory.