Creating Energy-Efficient, Low-Risk Data Centers

This is an exciting time for the data-center industry, which is experiencing a great deal of change. However, data-center operators do not necessarily like excitement; they like to keep things calm and low-risk. They want to maintain a steady, predictable, tightly controlled environment for their sensitive and valuable equipment.

In 2004, information-technology (IT) original-equipment manufacturers (OEMs) from ASHRAE TC 9.9, Mission Critical Facilities, Technology Spaces and Electronic Equipment, published the first vendor-neutral temperature and humidity ranges that did not void legacy IT-equipment warranties: Thermal Guidelines for Data Processing Environments. However, these data were prepared with a focus on aligning the IT and data-center-facilities (design and operation) industries; they were not singularly focused on the call for energy efficiency that would grow louder in subsequent years.

In 2008, as the energy-efficiency trend became more influential, the temperature and humidity ranges were expanded. Now, the third edition of Thermal Guidelines for Data Processing Environments has been released. It contains new data to help guide greater energy efficiency without voiding IT-equipment warranties.

The opportunities for compressor-less (no refrigeration) cooling never have been greater. The challenge no longer is to convince the data-center industry that energy efficiency is an important consideration. Instead, in the traditionally (and understandably) risk-averse world of mission-critical data-center operation, the challenge is how to keep things calm and minimize risk while saving energy.

Measurement Points

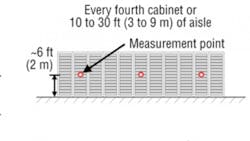

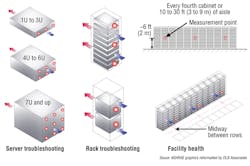

The first challenge to overcome when establishing vendor-neutral guidelines was to agree on the actual physical location of the measurement points. With data-center floor spaces becoming larger, it no longer was valid to consider overall room temperature as the design criterion. The criteria needed to be more exacting with respect to cooling-airflow management.

The third edition of Thermal Guidelines for Data Processing Environments identifies specific measurement points at the air inlet of IT-equipment packaging (typically the front face). The subsequent temperature and humidity ranges that are stated in the publication are based on these inlet points. Further, these defined measurement points aid in the determination of the “health” of an existing facility (Figure 1).

Recommended and Allowable Envelopes

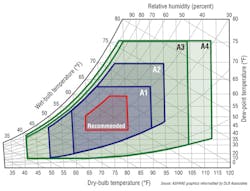

The first edition of Thermal Guidelines for Data Processing Environments introduced the concept of “recommended and allowable” environmental ranges (for temperature, humidity, maximum dew point, and maximum rate of rise) at the inlet of IT-equipment packaging. These ranges were expressed both in tabular form and as an enclosed envelope formed when they are plotted on a psychrometric chart, which is why the ranges sometimes are referred to in the industry as the “recommended envelope” and the “allowable envelope” (Figure 2).

The definitions of recommended and allowable sometimes led to the wrong conclusions being drawn on how to apply them. For reference, here are abbreviated definitions of the two ranges:

• Recommended environmental range: Facilities should be designed to achieve, under normal circumstances, ambient conditions that fall within the recommended range.

• Allowable environmental range: The allowable envelope is where IT manufacturers test their equipment to verify that it will function within those environmental boundaries.

The purpose of the recommended environmental range is to give guidance to designers and operators on the balanced combination of reliability and energy efficiency. The recommended environmental range is based on the collective IT OEMs’ expert knowledge of server-power consumption, reliability, and performance under various ambient-temperature and humidity conditions.

What often is missed is that directly below the definitions of recommended and allowable in the publication is an explanation of how to practically apply the ranges. Too often, the approach is a conservative one: The recommended range is treated as the absolute boundary for the design criteria, and the conservatism is compounded by the selection of a target condition toward the lower end or middle of the recommended range.

This approach results in a lost opportunity to take advantage of the longer periods during which ambient conditions could reduce the need for mechanical cooling. The practical application described in the publication speaks to the consequence of prolonged exposure of operating IT equipment beyond its recommended range and into its allowable range.

The overall spirit is that excursions beyond the recommended range and into the allowable range are not overly detrimental to the operation of a data center. Certainly, prolonged exposure in the allowable range can have an impact on IT-equipment reliability, but the impact is neither instantaneous nor an increase in risk that is orders of magnitude greater than that of being in the recommended range.

Designing a data center to accommodate and accept excursions into the allowable range under certain conditions opens up a far greater potential for energy-efficient designs and, in a surprisingly large number of cases, could be the difference between needing energy-intensive mechanical refrigeration equipment (e.g., compressors, chillers) and eliminating it completely from the design.
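To make the idea of occasional excursions concrete, the following is a minimal sketch that sorts hourly inlet readings into the two envelopes and reports the share of time spent in each. It checks dry-bulb temperature only, although the published envelopes also bound humidity and dew point. The limits, function names, and sample readings are assumptions for illustration; the limits in particular are placeholders to be replaced with the values published for the equipment class in question.

```python
from collections import Counter

# Assumed dry-bulb limits in degrees C. Placeholders for illustration;
# confirm the actual limits for the relevant class in the publication.
RECOMMENDED_C = (18.0, 27.0)
ALLOWABLE_C = (15.0, 32.0)


def classify_inlet(temp_c, recommended=RECOMMENDED_C, allowable=ALLOWABLE_C):
    """Return which envelope an inlet dry-bulb reading falls in."""
    if recommended[0] <= temp_c <= recommended[1]:
        return "recommended"
    if allowable[0] <= temp_c <= allowable[1]:
        return "allowable"
    return "outside"


def envelope_shares(inlet_temps_c):
    """Return the fraction of readings that fall in each envelope."""
    total = len(inlet_temps_c)
    if total == 0:
        raise ValueError("at least one reading is required")
    counts = Counter(classify_inlet(t) for t in inlet_temps_c)
    return {
        name: counts.get(name, 0) / total
        for name in ("recommended", "allowable", "outside")
    }


# Hypothetical inlet readings in degrees C, not measured data.
sample = [20.0, 24.5, 28.0, 31.0, 33.5, 16.0, 22.0, 26.9]
shares = envelope_shares(sample)
print(shares)
```

Run against a year of logged or simulated inlet temperatures, a tally like this shows how much of the time a design would rely on the allowable range rather than treating the recommended range as a hard boundary.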

New Environmental Classes

There are a number of different types of IT equipment, and each has a different tolerance and sensitivity to environmental conditions, as well as a different level of reliability necessary to perform its “mission.” For example, blade servers allow for hot swaps of individual server blades and often have dual (redundant) power supplies with dual power cords from two power sources. To account for this, the first edition of Thermal Guidelines for Data Processing Environments introduced the concept of classifying IT equipment into specific classes.

Previously, there were four IT-equipment classes (Class 1 through Class 4). Two of the four classes applied specifically to the type of IT equipment used in data-center applications (classes 1 and 2), with Class 1 being the most common for IT equipment deployed in dedicated data centers and Class 2 being geared more toward smaller dedicated server rooms that may not have the same level of precision in their cooling control systems (e.g., a server room within an office building).

The third edition of Thermal Guidelines for Data Processing Environments has more data-center IT-equipment classes to accommodate different applications and priorities of IT-equipment operation. This is critical because fewer data-center IT-equipment classes force a narrower optimization, whereas each data center needs to be optimized at a broader scale based on the data-center owner or operator’s own criteria and emphases (e.g., full-time economizer use vs. maximum focus on reliability).

The naming conventions have been updated to better delineate the different types of IT equipment. The old and new classes are now specified differently, with the previous classes 1, 2, 3, and 4 directly mapped to A1, A2, B, and C. The two new data-center classes that have been introduced are noted as A3 and A4. Each class has its own recommended and allowable environmental specifications, as summarized in Table 1, which is extracted from the more comprehensive version included in Thermal Guidelines for Data Processing Environments.
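The renaming described above can be captured as a simple lookup, which may be useful when reconciling older equipment documentation with the new class names. This is only a sketch; the variable and function names are hypothetical.

```python
# Previous class numbers mapped to the updated class names, as described above.
LEGACY_TO_CURRENT_CLASS = {1: "A1", 2: "A2", 3: "B", 4: "C"}

# Data-center classes introduced in the third edition (no legacy equivalent).
NEW_DATA_CENTER_CLASSES = ("A3", "A4")


def current_class(legacy_class):
    """Translate a previous class number (1 through 4) to its new name."""
    try:
        return LEGACY_TO_CURRENT_CLASS[legacy_class]
    except KeyError:
        raise ValueError(f"unknown legacy class: {legacy_class!r}") from None


print(current_class(2))
```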

What Is the ‘X Factor’?

For the first time, there is published numerical data directly from IT OEMs that quantifies what the relative failure rate would be for equipment operating at various temperatures. Further, this information is valid for both new and legacy IT equipment, which means it can be applied to existing data centers as well as new designs.

This quantification of relative failure rates is vital information when determining the operating conditions for a data center because it allows a better assessment of the tradeoff between risk of failure and energy efficiency when operating at higher temperatures. Additionally, because the scale is dimensionless and relative, it allows assessment of the overall risk of a variable temperature range, rather than a fixed one.

Through the combination of time-at-temperature histogram plots of weather data for a given locale and the relative failure-rate X-factor table within Thermal Guidelines for Data Processing Environments, a system designer quickly can discover whether economizer-centric operation that is more directly linked to outdoor ambient conditions represents more or less risk than operation at a fixed temperature.

Somewhat surprisingly, for almost all locations around the world, there is a negligible difference in failure risk between operating with a varying inlet temperature based on outdoor conditions and operating at a fixed inlet temperature of 68°F (20°C). In many places, varying inlet temperature based on outdoor conditions actually represents a lower risk of failure.
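One plausible way to carry out the comparison described above is an hours-weighted average of the relative failure rate across a time-at-temperature histogram. The sketch below assumes that approach, assumes the histogram already is expressed in inlet-temperature bins, and takes 20°C as the 1.0 baseline. The X-factor numbers and the hours are hypothetical placeholders, not values from the publication or from real weather data.

```python
# Hypothetical relative failure rates by inlet-temperature bin (degrees C).
# Placeholder numbers for illustration only; substitute the published table.
X_FACTOR_BY_BIN_C = {15: 0.7, 20: 1.0, 25: 1.2, 30: 1.4}


def time_weighted_x_factor(hours_by_bin_c, x_factor_by_bin_c=X_FACTOR_BY_BIN_C):
    """Average the relative failure rate, weighted by hours spent in each bin."""
    total_hours = sum(hours_by_bin_c.values())
    if total_hours == 0:
        raise ValueError("histogram contains no hours")
    weighted = sum(
        hours * x_factor_by_bin_c[bin_c]
        for bin_c, hours in hours_by_bin_c.items()
    )
    return weighted / total_hours


# Hypothetical annual histogram: hours spent at each inlet-temperature bin.
variable_hours = {15: 4000, 20: 2760, 25: 1500, 30: 500}
fixed_hours = {20: 8760}  # constant 20 degrees C inlet all year

variable_risk = time_weighted_x_factor(variable_hours)
fixed_risk = time_weighted_x_factor(fixed_hours)
print(round(variable_risk, 3), fixed_risk)
```

With these placeholder inputs, the many hours spent below 20°C more than offset the warmer hours, so the variable-temperature result comes out below the fixed-temperature baseline of 1.0. Real conclusions depend on the published table and on local weather data.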

The adoption of these vendor-neutral guidelines could be the single biggest catalyst to the growth of chillerless data centers, which offer both a capital-cost reduction and an operating-cost reduction and make the financial side of the tradeoff more attractive.

New Liquid-Cooling Classes

Also new in the third edition of Thermal Guidelines for Data Processing Environments is the introduction of liquid-cooling IT-equipment classes. These new classes reflect the movement by IT OEMs to provide IT equipment that has connections for liquid cooling media instead of airflow requirements at the inlet.

The environmental specifications for each liquid-cooling class include a range of supply-water temperatures as well as cooling-system architectures that can be used to achieve them. Additional information on water-flow rates and pressures, water quality, and velocity also is provided.

Five classes of IT equipment for liquid cooling have been defined, although it is stated that not all classes have IT equipment that is readily available. Table 2 summarizes the five classes and the specifications of each.

Additional Consideration of Increased Economizer Use

Operating at variable temperatures and allowing excursions into the allowable envelopes are criteria that lend themselves to the increased (if not full-time) use of economizers. Particulate and gaseous contamination becomes a more important consideration when there is an increased use of economizer systems, particularly for locations close to sources of combustion products, pollen, dirt, smoke, smog, etc.

Air quality and building materials should be checked carefully for sources of pollution and particulates, and additional filtration should be added to remove gaseous pollution and particulates, if needed. In all cases, engineering expertise should be applied when designing an economizer system for use in a data center.

Meaningful and Relevant Data

Convincing the data-center industry (owners, operators, designers, etc.) to become more energy efficient no longer is the challenge. Providing meaningful and relevant data that arms and enables the data-center industry to make informed decisions on energy-efficiency strategies is.

The third edition of Thermal Guidelines for Data Processing Environments offers credible and vendor-neutral published data that creates the opportunity to optimize energy-efficiency strategies on an individual basis to best meet the needs of the user and achieve the best total cost of ownership. Accomplishing this requires a holistic approach that considers more variables and the use of in-depth engineering assessment. This can not only save on operational expenses but also dramatically reduce capital costs.

The keys to success include holistic cooling design, a multivariable design approach, and multidiscipline designers who understand both facility cooling and IT.

A longtime member of HPAC Engineering’s Editorial Advisory Board, Don Beaty, PE, FASHRAE, is president of DLB Associates. He was the co-founder and first chair of ASHRAE Technical Committee TC 9.9 (Mission Critical Facilities, Technology Spaces and Electronic Equipment). DLB Associates is a consulting-engineering firm licensed in more than 40 states. The firm has provided design, commissioning, and operations support services for a wide variety of data-center clients, including eight of the largest Google data-center campuses worldwide.